What is the relationship between mind and body?

Maybe the mind is like a video game controller, moving the body around the world, taking it on joyrides. Or maybe the body manipulates the mind with hunger, sleepiness, and anxiety, something like a river steering a canoe. Is the mind like electromagnetic waves, flickering in and out of our lightbulb bodies? Or is the mind a car on the road? A ghost in the machine?

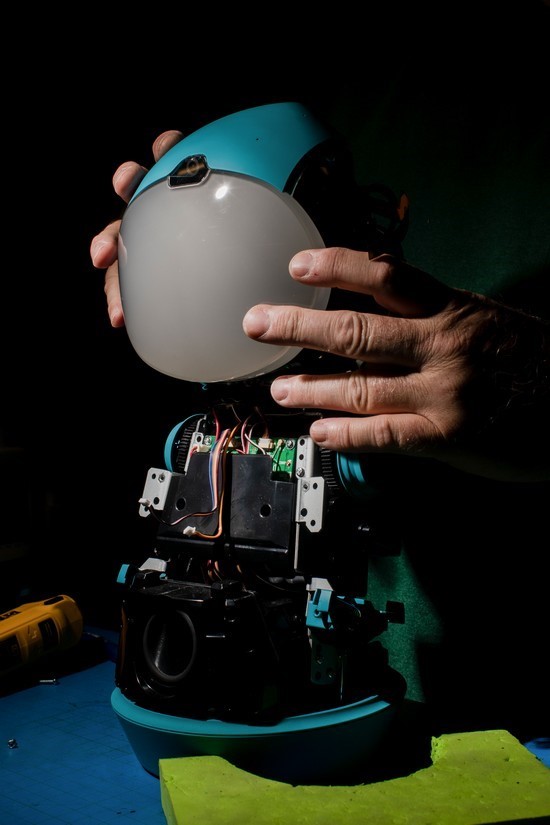

Robin Johnson, a robot technician at Embodied, works on a Moxie unit at the company's lab in Pasadena, California, April 6, 2023.

Maybe no metaphor will ever quite fit because there is no distinction between mind and body. There is just experience, or some kind of physical process, a gestalt.

These questions, agonized over by philosophers for centuries, are gaining new urgency as sophisticated machines with artificial intelligence begin to infiltrate society. Chatbots such as OpenAI’s GPT-4 and Google’s Bard have minds, in some sense. Trained on vast troves of human language, they have learned how to generate novel combinations of text, images and even videos. When primed in the right way, they can express desires, beliefs, hopes, intentions, love. They can speak of introspection and doubt, self-confidence and regret.

But some AI researchers say that the technology will not reach true intelligence, or true understanding of the world, until it works with a body that can perceive, react to and feel around its environment. For them, talk of disembodied intelligent minds is misguided, even dangerous. AI that is unable to explore the world and learn its limits, in the ways that children figure out what they can and cannot do, could make life-threatening mistakes and pursue its goals at the risk of human welfare.

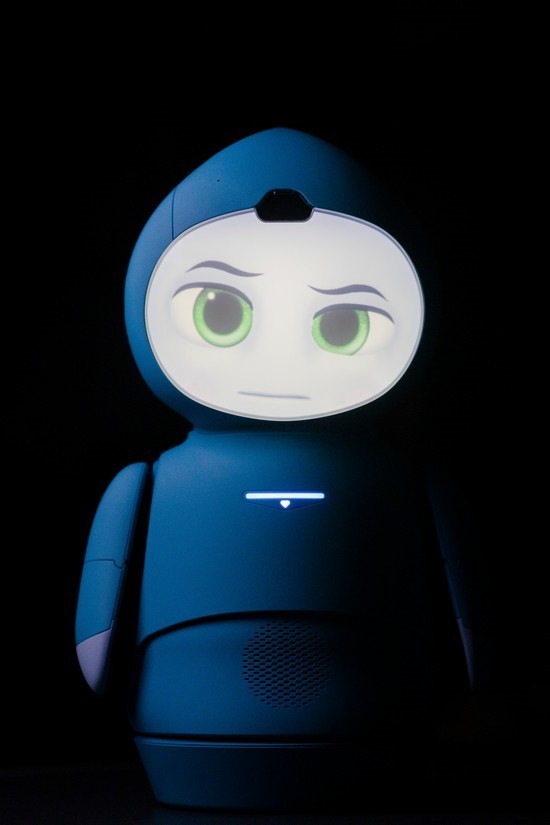

Moxie, which has sensors that can take in visual cues and respond to your body language, at Embodied’s lab in Pasadena, California, April 6, 2023.

“The body, in a very simple way, is the foundation for intelligent and cautious action,” said Joshua Bongard, a roboticist at the University of Vermont. “As far as I can see, this is the only path to safe AI.”

At a lab in Pasadena, California, a small team of engineers has spent the past few years developing one of the first pairings of a large language model with a body: a turquoise robot named Moxie. About the size of a toddler, Moxie has a teardrop-shaped head, soft hands and alacritous green eyes. Inside its hard plastic body is a computer processor that runs the same kind of software as ChatGPT and GPT-4. Moxie’s makers, part of a startup called Embodied, describe the device as “the world’s first AI robot friend.”

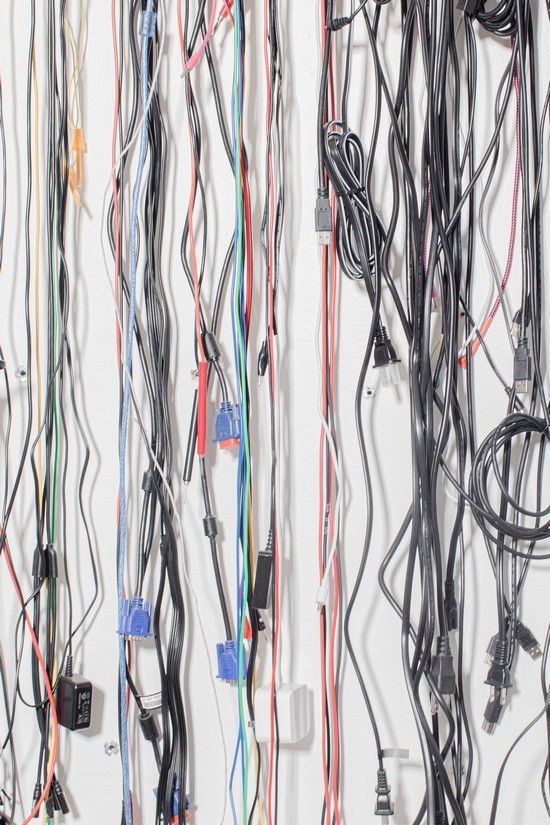

Power and computer cables dangle from a wall at Embodied’s lab in Pasadena, California, April 6, 2023.

The bot was conceived, in 2017, to help children with developmental disorders practice emotional awareness and communication skills. When someone speaks to Moxie, its processor converts the sound into text and feeds the text into a large language model, which in turn generates a verbal and physical response. Moxie’s eyes can move to console you for the loss of your dog, and it can smile to pump you up for school. The robot also has sensors that take in visual cues and respond to your body language, mimicking and learning from the behavior of people around it.

“It’s almost like this wireless communication between humans,” said Paolo Pirjanian, a roboticist and the founder of Embodied. “You literally start feeling it in your body.” Over time, he said, the robot gets better at this kind of give and take, like a friend getting to know you.

Researchers at Alphabet, Google’s parent company, have taken a similar approach to integrating large language models with physical machines. In March, the company announced the success of a robot they called PaLM-E, which was able to absorb visual features of its environment and information about its own body position and translate it all into natural language. This allowed the robot to represent where it was in space relative to other things and eventually open a drawer and pick up a bag of chips.

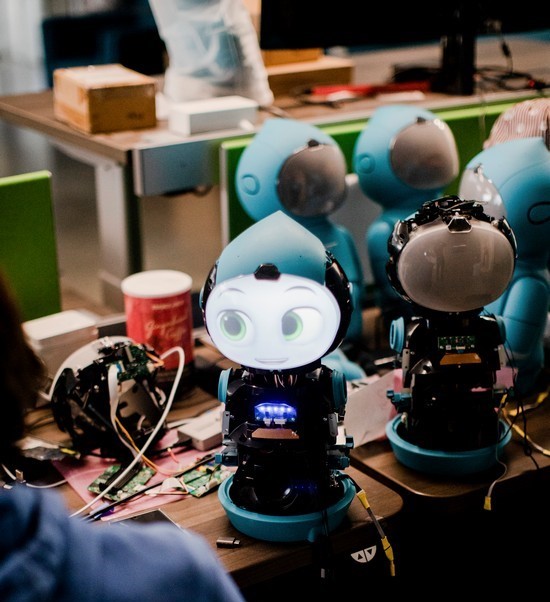

Units of Moxie, which has sensors that can take in visual cues and respond to your body language, at Embodied’s lab in Pasadena, California, April 6, 2023.

Robots of this kind, experts say, will be able to perform basic tasks without special programming. They could ostensibly pour you a glass of Coke, make you lunch or pick you up from the floor after a bad tumble, all in response to a series of simple commands.

But many researchers doubt that the machines’ minds, when structured in this modular way, will ever be truly connected to the physical world and, therefore, that they will ever be able to display crucial aspects of human intelligence.

Boyuan Chen, a roboticist at Duke University who is working on developing intelligent robots, pointed out that the human mind, or any other animal mind for that matter, is inextricable from the body’s actions in and reactions to the real world, shaped over millions of years of evolution. Human babies learn to pick up objects long before they learn language.

The artificially intelligent robot’s mind, in contrast, was built entirely on language, and often makes common-sense errors that stem from training procedures. It lacks a deeper connection between the physical and theoretical, Chen said. “I believe that intelligence can’t be born without having the perspective of physical embodiments.”

Bongard, of the University of Vermont, agreed. Over the past few decades, he has developed small robots made of frog cells, called xenobots, that can complete basic tasks and move around their environment. Although xenobots look much less impressive than chatbots that can write original haikus, they might actually be closer to the kind of intelligence we care about.

An early iteration of Moxie, which has sensors that can take in visual cues and respond to your body language, at Embodied’s lab in Pasadena, California, April 6, 2023.

“Slapping a body onto a brain, that’s not embodied intelligence,” Bongard said. “It has to push against the world and observe the world pushing back.”

He also believes that attempts to ground artificial intelligence in the physical world are safer than alternative research projects.

Some experts, including Pirjanian, recently conveyed concern in a letter about the possibility of creating AI that could disinterestedly steamroll humans in the pursuit of some goal (such as efficiently producing paper clips), or that could be harnessed for nefarious purposes (such as disinformation campaigns). The letter called for a temporary pause in the training of models more powerful than GPT-4.

Bongard, as well as a number of other scientists in the field, thought the letter calling for a pause in research could bring about uninformed alarmism. But he is concerned about the dangers of our ever-improving technology and believes that the only way to suffuse embodied AI with a robust understanding of its own limitations is to rely on the constant trial and error of moving around in the real world.

Start with simple robots, he said, “and as they demonstrate that they can do stuff safely, then you let them have more arms, more legs, give them more tools.”

And maybe, with the help of a body, a real artificial mind will emerge.
