SAN FRANCISCO — Naveen Rao, a neuroscientist turned tech

entrepreneur, once tried to compete with Nvidia, the world’s leading maker of

chips tailored for artificial intelligence.

At a startup that the semiconductor giant Intel later

bought, Rao worked on chips intended to replace Nvidia’s graphics processing

units, which are components adapted for AI tasks like machine learning. But

while Intel moved slowly, Nvidia swiftly upgraded its products with new AI

features that countered what he was developing, Rao said.

After leaving Intel and leading a software startup,

MosaicML, Rao used Nvidia’s chips and evaluated them against those from rivals.

He found that Nvidia had differentiated itself beyond the chips by creating a

large community of AI programmers who consistently invent using the company’s

technology.

“Everybody builds on Nvidia first,” Rao said. “If you come

out with a new piece of hardware, you’re racing to catch up.”

Over more than 10 years, Nvidia has built a nearly

impregnable lead in producing chips that can perform complex AI tasks like

image, facial, and speech recognition, as well as generating text for chatbots

like ChatGPT. The one-time industry upstart achieved that dominance by

recognizing the AI trend early, tailoring its chips to those tasks and then

developing key pieces of software that aid in AI development.

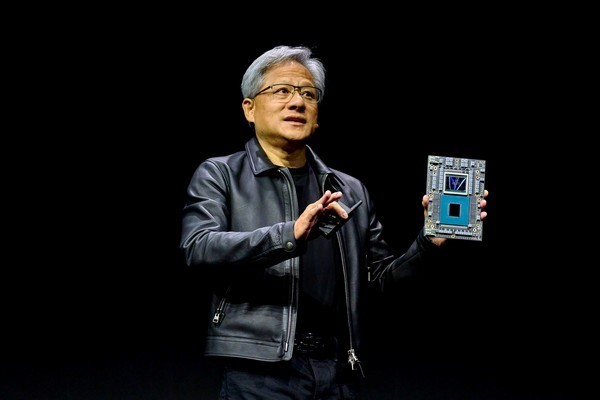

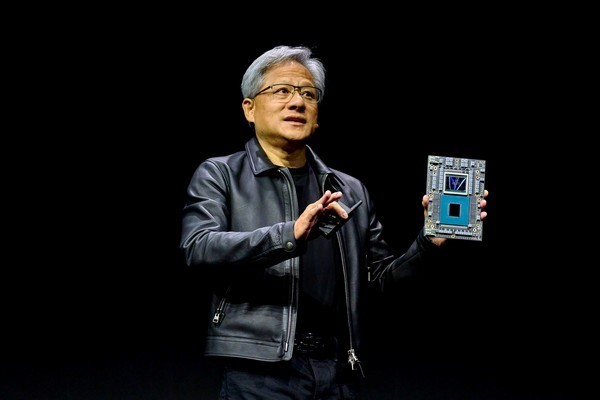

Jensen Huang,

Nvidia’s chief executive, speaks at a conference in Los Angeles on Aug. 8,

2023.

Jensen Huang, Nvidia’s co-founder and CEO, has since kept

raising the bar. To maintain its leading position, his company has also offered

customers access to specialized computers, computing services and other tools

of their emerging trade. That has turned Nvidia, for all intents and purposes,

into a one-stop shop for AI development.

While Google, Amazon, Meta, IBM, and others have also

produced AI chips, Nvidia today accounts for more than 70 percent of AI chip

sales and holds an even bigger position in training generative AI models,

according to the research firm Omdia.

In May, the company’s status as the most visible winner of

the AI revolution became clear when it projected a 64 percent leap in quarterly

revenue, far more than Wall Street had expected. On Wednesday, Nvidia — which

has surged past $1 trillion in market capitalization to become the world’s most

valuable chipmaker — is expected to confirm those record results and provide

more signals about booming AI demand.

“Customers will wait 18 months to buy an Nvidia system

rather than buy an available, off-the-shelf chip from either a startup or

another competitor,” said Daniel Newman, an analyst at Futurum Group. “It’s

incredible.”

Mustafa Suleyman in

Menlo Park, Calif., on May 2, 2023. Nvidia has invested in artificial intelligence startups including Inflection AI, which is run by Suleyman.

Huang, 60, who is known for a trademark black leather

jacket, talked up AI for years before becoming one of the movement’s best-known

faces. He has publicly said computing is going through its biggest shift since

IBM defined how most systems and software operate 60 years ago. Now, he said,

GPUs and other special-purpose chips are replacing standard microprocessors,

and AI chatbots are replacing complex software coding.

“The thing that we understood is that this is a reinvention

of how computing is done,” Huang said in an interview. “And we built everything

from the ground up, from the processor all the way up to the end.”

Huang helped start Nvidia in 1993 to make chips that render

images in video games. While standard microprocessors excel at performing

complex calculations sequentially, the company’s GPUs do many simple tasks at

once.

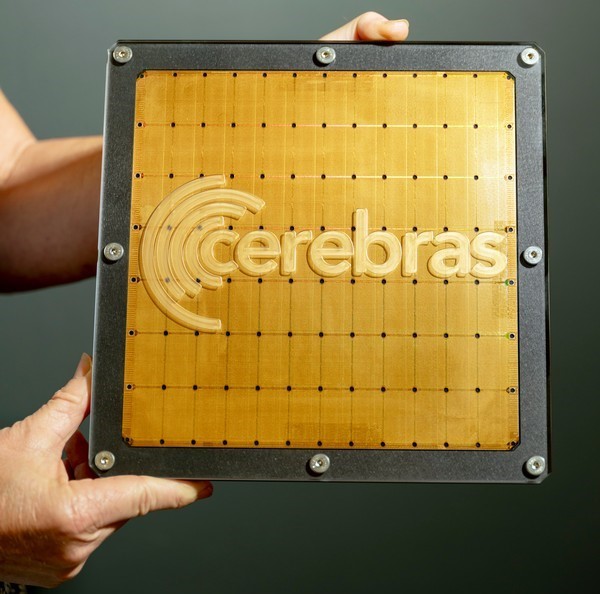

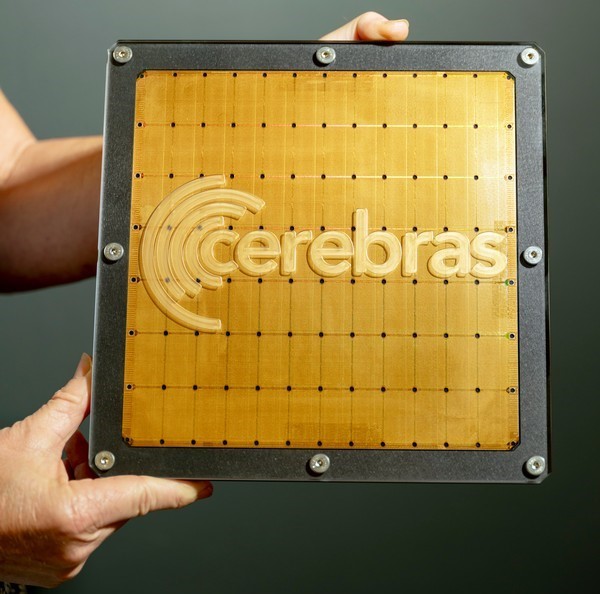

The second-generation

Wafer-Scale Engine (WSE-2), a chip tailored for artificial intelligence

manufactured by Cerebras, is displayed for a photograph at the company’s data

center in Santa Clara, Calif., on July 19, 2023. Cerebras built the chip to

compete with Nvidia.

In 2006, Huang took that further. He announced software

technology called CUDA, which helped program the GPUs for new tasks, turning

them from single-purpose chips to more general-purpose ones that could take on

other jobs in fields like physics and chemical simulations.

A big breakthrough came in 2012, when researchers used GPUs

to achieve humanlike accuracy in tasks such as recognizing a cat in an image —

a precursor to recent developments like generating images from text prompts.

Nvidia responded by turning “every aspect of our company to

advance this new field,” Huang recently said in a commencement speech at

National Taiwan University.

Andrew Feldman, the

chief executive of Cerebras, at the company’s data center in Santa Clara,

Calif., on July 19, 2023. Cerebras has built a large artificial intelligence

chip to compete with Nvidia.

The effort, which the company estimated has cost more than

$30 billion over a decade, made Nvidia more than a component supplier. Besides

collaborating with leading scientists and startups, the company built a team

that directly participates in AI activities like creating and training language

models.

Advance warning about what AI practitioners need led Nvidia

to develop many layers of key software beyond CUDA. Those included hundreds of

prebuilt pieces of code, called libraries, that save labor for programmers.

In hardware, Nvidia gained a reputation for consistently

delivering faster chips every couple of years. In 2017, it started tweaking

GPUs to handle specific AI calculations.

That same year, Nvidia, which typically sold chips or

circuit boards for other companies’ systems, also began selling complete

computers to carry out AI tasks more efficiently. Some of its systems are now

the size of supercomputers, which it assembles and operates using proprietary

networking technology and thousands of GPUs. Such hardware may run weeks to

train the latest AI models.

“This type of computing doesn’t allow for you to just build

a chip and customers use it,” Huang said in the interview. “You’ve got to build

the whole data center.”

Last September, Nvidia announced the production of new chips

named H100, which it enhanced to handle so-called transformer operations. Such

calculations turned out to be the foundation for services like ChatGPT, which

have prompted what Huang calls the “iPhone moment” of generative AI.

A photo provided by

Nvidia shows the company’s Grace Hopper chip, which combines GPUs with

internally developed microprocessors.

To further extend its influence, Nvidia has also recently

forged partnerships with Big Tech companies and invested in high-profile AI

startups that use its chips. One was Inflection AI, which in June announced

$1.3 billion in funding from Nvidia and others. The money was used to help

finance the purchase of 22,000 H100 chips.

Mustafa Suleyman, Inflection’s CEO, said there was no

obligation to use Nvidia’s products but competitors offered no viable

alternative. “None of them come close,” he said.

Nvidia has also directed cash and scarce H100s lately to

upstart cloud services such as CoreWeave, which allow companies to rent time on

computers rather than buying their own. CoreWeave, which will operate

Inflection’s hardware and owns more than 45,000 Nvidia chips, raised $2.3

billion in debt this month to help buy more.

Given the demand for its chips, Nvidia must decide who gets

how many of them. That power makes some tech executives uneasy.

“It’s really important that hardware doesn’t become a

bottleneck for AI or gatekeeper for AI,” said Clément Delangue, CEO of Hugging

Face, an online repository for language models that collaborates with Nvidia

and its competitors.

Some rivals said it was tough to compete with a company that

sold computers, software, cloud services and trained AI models, as well as processors.

“Unlike any other chip company, they have been willing to

openly compete with their customers,” said Andrew Feldman, CEO of Cerebras, a

startup that develops AI chips.

But few customers are complaining, at least publicly. Even

Google, which began creating competing AI chips more than a decade ago, relies

on Nvidia’s GPUs for some of its work.

Demand for Google’s own chips is “tremendous,” said Amin

Vahdat, a Google vice president and general manager of compute infrastructure.

But, he added, “we work really closely with Nvidia.”

Nvidia doesn’t discuss prices or chip allocation policies,

but industry executives and analysts said each H100 costs $15,000 to more than

$40,000, depending on packaging and other factors — roughly two to three times

more than the predecessor A100 chip.

Pricing “is one place where Nvidia has left a lot of room

for other folks to compete,” said David Brown, a vice president at Amazon’s

cloud unit, arguing that its own AI chips are a bargain compared with the

Nvidia chips it also uses.

Huang said his chips’ greater performance saved customers

money. “If you can reduce the time of training to half on a $5 billion data

center, the savings is more than the cost of all of the chips,” he said. “We

are the lowest-cost solution in the world.”

He has also started promoting a new product, Grace Hopper,

which combines GPUs with internally developed microprocessors, countering chips

that rivals say use much less energy for running AI services.

Still, more competition seems inevitable. One of the most

promising entrants in the race is a GPU sold by Advanced Micro Devices, said

Rao, whose startup was recently purchased by the data and AI company

Databricks.

“No matter how anybody wants to say it’s all done, it’s not

all done,” Lisa Su, AMD’s CEO, said.

Jordan News